Docker containers are awesome, and we can containerize many kinds of applications. Earlier in this blog series, we learnt the basics of Docker, went through its architecture and basic commands, and dockerized a sample application. In real life, however, applications are made up of many components, running either on the same host or on different hosts, and these components communicate with each other. We need Docker containers to talk to other systems, and we also want them to be able to listen to other systems. In this blog, we will explore the concept of Docker networking.

Container Network Model (CNM)

Networking in Docker is built on the CNM, an abstraction that enables support for different types of networks in Docker.

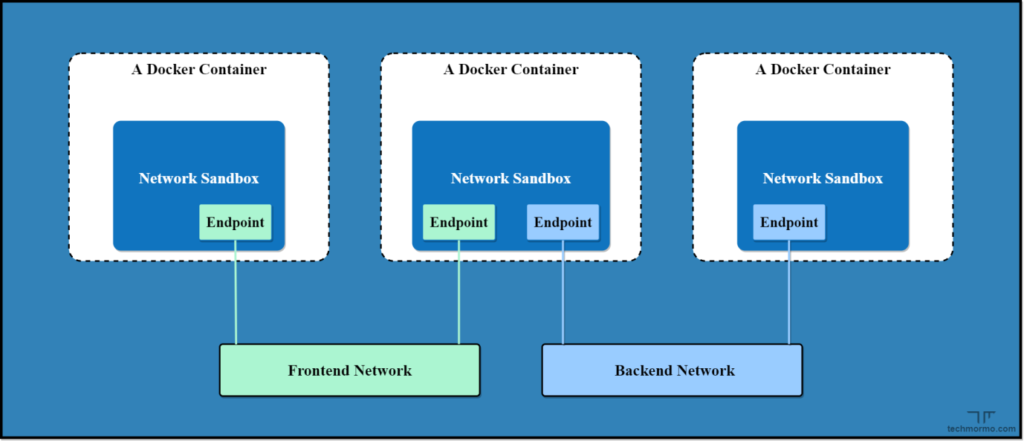

In simple words, if we want to build a house, we first prepare a blueprint. We then give it to the builder, who refers to the blueprint and builds the house for us. Similarly, the CNM acts as a blueprint for networking configurations. The CNM has 3 main components: Sandbox, Endpoint and Network.

Sandbox

A Sandbox is the component that contains the configuration of a container’s network stack, for example the management of the container’s network interfaces, DNS settings, and route tables. A Sandbox may contain many Endpoints from multiple Networks.

Endpoint

An Endpoint is responsible for connecting a Sandbox to a Network. An Endpoint belongs to only one Network and connects only one Sandbox.

Network

A Network is a group of Endpoints that are capable of communicating with each other.
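To see how these three components show up on a running container, the sketch below reads them from `docker inspect`. The function name `show_cnm_objects` is hypothetical, and the sketch assumes your Docker version still reports the `SandboxID` field under `NetworkSettings`.

```shell
# Hypothetical helper (not part of Docker): print the CNM objects
# that Docker reports for one container. $1 is a container name or ID.
show_cnm_objects() {
  # Sandbox: identifier of the container's isolated network stack
  docker inspect --format 'sandbox: {{.NetworkSettings.SandboxID}}' "$1"
  # Endpoints: one JSON entry per Network the container is attached to
  docker inspect --format '{{json .NetworkSettings.Networks}}' "$1"
}
```

Calling `show_cnm_objects <container_name>` should print one Sandbox identifier followed by a JSON map keyed by Network name, where each value describes the Endpoint that joins the container to that Network.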

Network drivers

Network drivers are pluggable interfaces that provide the actual network implementations for Docker containers.

In other words, if the CNM is the blueprint, network drivers are the actual building that is being built. Docker ships with a default set of network drivers. It also lets users create their own network drivers.

The following network drivers are provided by Docker by default:

- none

- host

- bridge

- overlay

- ipvlan

- macvlan
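As a quick way to check which of these drivers are already in use on a host, the following sketch lists the existing networks together with their drivers. The helper name `list_networks` is an assumption made for illustration; the `--filter` and `--format` options belong to `docker network ls`.

```shell
# Hypothetical helper: list networks as "name: driver", optionally
# restricted to a single driver (for example: bridge).
list_networks() {
  if [ -n "${1:-}" ]; then
    docker network ls --filter "driver=$1" --format '{{.Name}}: {{.Driver}}'
  else
    docker network ls --format '{{.Name}}: {{.Driver}}'
  fi
}
```

On a fresh installation, `list_networks` typically shows three pre-created networks named bridge, host, and none. Networks for the other drivers appear only after they have been created.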

Let us explore them in detail.

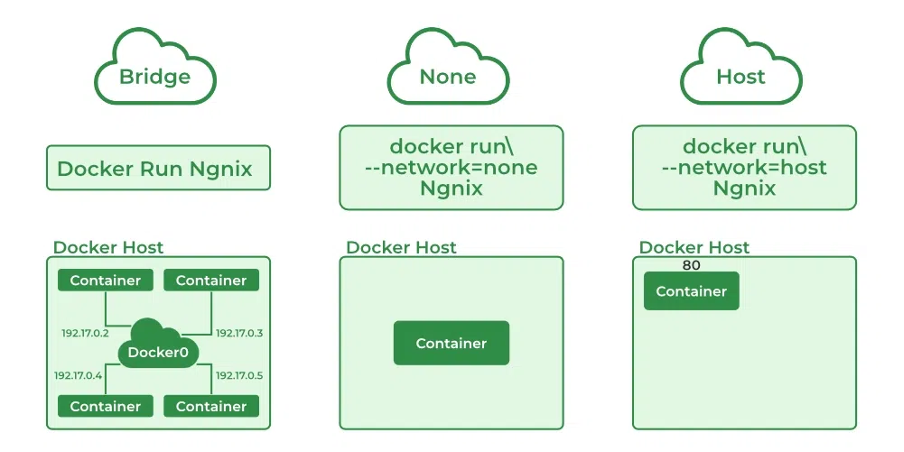

None

When a container is associated with the “none” driver, networking is disabled for that container. The container becomes fully isolated.

We specify the network for a Docker container using the “--network” parameter of the docker run command. Hence, to run an nginx container with the “none” network driver, we can use the following snippet:

docker run -d --network none nginx

Host

When we specify the “host” network driver for a container, the container shares the networking configuration of the host itself. In other words, from a networking point of view, the container and the host appear to be the same machine. This means that the container and the host share the same ports. Every container has its own set of exposed ports. You can find out which ports a container exposes using the following snippet:

docker inspect <container_name>

Now, when you attach the “host” network driver while running the container, the exposed port of the container is bound to the same port number on the host.

To specify the “host” network driver for an nginx container, we can use the following snippet:

docker run -d --network host nginx

This way, we do not need to worry about assigning a host port to our container. The disadvantage of this network driver is that we cannot run multiple containers with the same exposed port on a single host using the “host” network driver.
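Since the full `docker inspect` output is long, a narrower query can make the exposed ports easier to spot. The sketch below is one possible way to do that; the helper name `exposed_ports` is hypothetical.

```shell
# Hypothetical helper: print only the exposed ports of a container
# as JSON. $1 is a container name or ID.
exposed_ports() {
  docker inspect --format '{{json .Config.ExposedPorts}}' "$1"
}
```

For a container started from the nginx image, the result of `exposed_ports <container_name>` should include `80/tcp`, which is the port that would clash if a second such container were started with the “host” network driver.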

Bridge

This driver tells Docker to create an internal network within the host. We can place multiple containers in a single network by attaching them to a network that uses the “bridge” driver. These containers can communicate directly with each other but are isolated from applications or containers that do not belong to this particular network.

To specify the “bridge” network driver for an nginx container, we can use the following snippet:

docker run -d --network bridge nginx

This is the default network driver for containers. When no network driver is specified, the container is added to the default bridge network.
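To illustrate containers on the same bridge network talking to each other, here is a minimal sketch that uses a user-defined bridge network, where containers can reach each other by name. The function name `bridge_demo`, the container name `web`, and the use of the alpine image for the ping test are assumptions chosen for this example.

```shell
# Hypothetical helper: create a user-defined bridge network, start an
# nginx container on it, and ping that container by name from a second,
# short-lived container. $1 is the name of the network to create.
bridge_demo() {
  net="$1"
  # Each step runs only if the previous one succeeded
  docker network create --driver bridge "$net" &&
  docker run -d --name web --network "$net" nginx &&
  docker run --rm --network "$net" alpine ping -c 1 web
}
```

After trying `bridge_demo demo-net`, the leftovers can be removed with `docker rm -f web` followed by `docker network rm demo-net`. Name-based lookup is a feature of user-defined bridge networks; on the default bridge network, containers would have to address each other by IP.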

Overlay

The overlay driver tells Docker to create a distributed network that spans multiple Docker hosts.
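An overlay network has to exist before a container can join it. The sketch below shows one way to create one, assuming the current host may act as a swarm manager and that standalone containers should be allowed to attach. The function name `create_overlay` is hypothetical, and on hosts with several network interfaces `docker swarm init` may additionally need an `--advertise-addr` option.

```shell
# Hypothetical helper: make sure swarm mode is active, then create an
# attachable overlay network. $1 is the name of the network to create.
create_overlay() {
  # Initialise swarm mode only if this host is not already in a swarm
  state=$(docker info --format '{{.Swarm.LocalNodeState}}')
  if [ "$state" != "active" ]; then
    docker swarm init || return 1
  fi
  # --attachable lets standalone containers join, not only swarm services
  docker network create --driver overlay --attachable "$1"
}
```

Other hosts would then join the swarm with the `docker swarm join` command printed by `docker swarm init`, after which containers on those hosts can attach to the same network.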

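An overlay network is not available by default: it has to be created first on a host running in swarm mode, and it must be attachable for standalone containers to join it. To attach an nginx container to such a network, we can use the following snippet:

docker run -d --network <overlay_network_name> nginx

ipvlan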

ipvlan is a Docker network driver that offers control over the IP addresses assigned to containers, both IPv4 and IPv6. It also offers control over layer 2 and layer 3 VLAN tagging and routing. This driver is highly beneficial when containerized services need to be integrated with an existing network. It can also offer performance benefits over bridge-based networking, so it is useful in cases where network performance is critical.
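As an illustration, an ipvlan network in layer 2 mode could be created roughly as follows. The function name `create_ipvlan` is hypothetical, and the interface, subnet, and gateway passed to it must match your existing network; the values in the usage note are placeholders only.

```shell
# Hypothetical helper: create an ipvlan network in L2 mode.
# $1 network name, $2 parent interface on the host,
# $3 subnet in CIDR notation, $4 gateway address
create_ipvlan() {
  docker network create --driver ipvlan \
    --subnet "$3" --gateway "$4" \
    -o parent="$2" -o ipvlan_mode=l2 \
    "$1"
}
```

A call might look like `create_ipvlan demo-ipvlan eth0 192.168.1.0/24 192.168.1.1`, where `eth0` and the addresses are assumed values for a typical home or lab network.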

macvlan

macvlan is another advanced option that enables containers to appear as physical devices on your network. It works by assigning each container in the network a unique MAC address.

To use this network type, you must dedicate one of your host’s physical interfaces to the virtual network. On a busy Docker host running many containers, the wider network must also be configured to cope with the potentially large number of MAC addresses.
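A macvlan network tied to a physical interface might be set up along these lines. Both function names are hypothetical, and the parent interface, subnet, gateway, and container IP are assumptions that have to be adapted to the real network.

```shell
# Hypothetical helper: create a macvlan network on a host interface.
# $1 network name, $2 parent interface on the host,
# $3 subnet in CIDR notation, $4 gateway address
create_macvlan() {
  docker network create --driver macvlan \
    --subnet "$3" --gateway "$4" \
    -o parent="$2" \
    "$1"
}

# Hypothetical helper: start nginx on that network with a fixed address.
# $1 network name, $2 IP address inside the network's subnet
run_on_macvlan() {
  docker run -d --network "$1" --ip "$2" nginx
}
```

For example, `create_macvlan demo-macvlan eth0 192.168.1.0/24 192.168.1.1` followed by `run_on_macvlan demo-macvlan 192.168.1.50` would make the nginx container appear on the physical network as its own device with its own MAC address.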

Creating a network

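We can create our own network using the docker network create command. The example below uses the bridge driver; the host driver cannot be used here, because Docker allows only one instance of the host network.

docker network create --driver bridge <network_name>

Connecting to the network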

We can add a Docker container to a network using the connect command as follows:

docker network connect <network_name> <container_name>

Details of a network

We can find the details of a Docker network using the following command:

docker network inspect <network_name>

Conclusion

In this blog, we have explored the key concepts in Docker networking. With these, we can simplify many of the networking aspects of real-world projects when we containerize applications.

The bridge network driver is the default driver for Docker containers, and in most cases it is the most suitable one. It enables containers to communicate with each other using their own IP addresses. The containers can also access the host network.

Host networks bind the ports of the containers to the host ports with the same number. This makes the application behave much as if it were installed directly on the host instead of in a container. However, when multiple containers on one host expose the same port, we cannot use this network driver for all of them.

When we have containers on different Docker hosts and these containers need to communicate with each other, we make use of the overlay network driver. This lets us set up our own distributed environment for our containers, which is a building block for high availability.

In cases where containers need to appear as physical devices on the host’s network, macvlan networks enable this functionality, for example when an application’s container is used to monitor network traffic.

ipvlan networks are meant for special cases with very specific, advanced requirements regarding container IP addresses, VLAN tags, and routing.

Docker also supports third-party network plugins, which expand the networking system with additional operating modes. These include Kuryr, which implements networking using OpenStack Neutron, and Weave, an overlay network with an emphasis on service discovery, security, and fault tolerance.

Finally, Docker networking is always optional at the container level: setting a container’s network to none will completely disable its networking stack. The container will be unable to reach its neighbors, your host’s services, or the internet. This helps improve security by sandboxing applications that aren’t expected to require connectivity.
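The isolation described above can be observed directly. The following sketch starts a throwaway container with networking disabled and lists its network interfaces; the function name `show_isolated_interfaces` and the choice of the alpine image are assumptions for illustration.

```shell
# Hypothetical helper: list the network interfaces visible inside a
# short-lived container that was started with networking disabled.
show_isolated_interfaces() {
  docker run --rm --network none alpine ip addr show
}
```

Running `show_isolated_interfaces` should list only the loopback interface, which confirms that the container has no route to its neighbors, the host, or the internet.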